With today's computer performance, compilation has become fast enough that a distributed build can be slower than a local one (sending your files and their dependencies over the network and back can take longer than compiling them locally).

However, when working on large projects or building on old (or not so powerful) hardware, it helps if the PCs' brotherhood gives you a hand.

If you have two or more similar systems (e.g. x86_64 and x86_64), the initial distcc setup is a breeze, since both GCC compilers target the same architecture and should have the same CC prefix (e.g. x86_64-pc-linux-gnu-). This kind of setup is common and widely documented on the Internet.

The problem comes when you attempt to set up different architectures, for instance an ARM (on BCM2835/BCM2708 hardware) and an x86_64. Since this is a real experiment I have done, I will refer to the two systems as follows:

- The ARM will be the system you are compiling for (the HOST)

- The x86_64 will be the system to which you distribute the build tasks (the SLAVE)

The first drawback of this setup is that, since the HOST and the SLAVE(s) have different hardware architectures, you need a cross-compiler on the SLAVE(s) capable of producing code for the HOST architecture. Note that the cross-compiler you install on the SLAVE(s) will have its own CC prefix (e.g. armv6-rpi-linux-gnueabi-).

Another drawback I encountered was that on the HOST the CC prefix is armv6j-hardfloat-linux-gnueabi- while on the SLAVE it is armv6-rpi-linux-gnueabi-. Every time the HOST asks the SLAVE(s) for a hand with some files, it tells the SLAVE(s) which program to use (e.g. armv6j-hardfloat-linux-gnueabi-gcc). Since the SLAVE(s) do not have such a program, the compilation fails and the HOST gets back an error message like:

distcc ERROR: compile on failed with exit code 110

Below I present, step by step, my setup for distributed compilation with distcc. Note that it was written for Gentoo; on other Linux distros the process should be similar but not exactly the same:

- install distcc on the HOST

- configure distcc on the HOST

- install the cross-compiler on the SLAVE

- build and configure the cross-compiler on the SLAVE

- install distcc on the SLAVE

- configure distcc on the SLAVE

- if your HOST is running Gentoo Linux, configure the HOST's Portage to work with distcc

- compile the kernel on the R-Pi itself via distcc (natively, not cross-compiled)

- is it worth it? good question!

1. Install distcc on the HOST

Depending on the Linux distribution you use, distcc can be installed in several ways. The old way is download+configure+make+install, and it works every time :). However, if you want to take advantage of your distribution's packaging tool, you should definitely use it, whatever it is called: apt/dpkg, portage, pacman, rpm, etc. On Gentoo I use Portage, and installing the sys-devel/distcc package means running the following command:

root@rpi-gentoo ~ $ emerge distcc

2. Configure distcc on the HOST

If you plan to use your HOST as a slave for other systems that want to distribute their building work over the network, you should instruct your HOST system about how to run the distccd service. Edit the file /etc/conf.d/distccd and make sure it contains the following settings (note that these environment variables are well documented in your default configuration file):

DISTCC_VERBOSE="0"

DISTCCD_OPTS="--user nobody"

DISTCC_LOG="/var/log/distcc.log"

DISTCCD_EXEC="/usr/bin/distccd"

DISTCCD_PIDFILE="/var/run/distccd/distccd.pid"

DISTCCD_OPTS="${DISTCCD_OPTS} --port 3632"

DISTCCD_OPTS="${DISTCCD_OPTS} --log-level critical"

DISTCCD_OPTS="${DISTCCD_OPTS} --allow <others-ip/netmask>"

DISTCCD_OPTS="${DISTCCD_OPTS} -N 15"

We must also tell the /usr/bin/distcc client which SLAVEs to use when it distributes compilation jobs across the network. For that, edit the file /etc/distcc/hosts as follows:

... localhost

where SLAVE*-IP is either the IP address or the host name of the SLAVE system(s) that will accept our distributed compiling jobs. We put localhost at the end of the list so that distcc distributes jobs over the network first and only then uses the local CPU.
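A minimal sketch of writing that file from a list of SLAVE names, assuming the Gentoo path /etc/distcc/hosts used above. The function name write_distcc_hosts and the example addresses are hypothetical; the only rule it encodes is the one just described (SLAVEs first, localhost last).

```shell
#!/bin/bash
# Hypothetical helper: writes a distcc hosts file with the SLAVEs first and
# localhost last, so distcc tries the network before the local CPU.
# Usage: write_distcc_hosts FILE SLAVE [SLAVE...]
write_distcc_hosts() {
    local file=$1
    shift
    if [ "$#" -eq 0 ]; then
        echo "usage: write_distcc_hosts FILE SLAVE [SLAVE...]" >&2
        return 1
    fi
    # "$*" joins the SLAVE names with single spaces; localhost goes last.
    printf '%s localhost\n' "$*" > "$file"
}

# Example, as root on the HOST (the addresses are placeholders):
# write_distcc_hosts /etc/distcc/hosts 192.168.1.10 192.168.1.11
```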

Whenever the C compiler is called on the HOST, we want distcc to "hijack" that call and distribute the compiling job(s) to the configured distcc hosts. To achieve that we have to make a few more adjustments:

- remove the original symlinks: /usr/lib/distcc/bin/{c++,cc,g++,gcc}

- create a wrapper script

- recreate those 4 symlinks to point to our newly created wrapper script

- adjust the global PATH variable so that the distcc install directory comes first when the system searches for C compilers

Run the following commands as root:

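DISTCC_BIN=/usr/lib/distcc/bin
GCC_WRAPPER=${DISTCC_BIN}/$(gcc -dumpmachine)-wrapper
rm -f ${DISTCC_BIN}/{c++,cc,g++,gcc}
# EOT is unquoted: ${DISTCC_BIN} and $(gcc -dumpmachine) expand now, while the escaped \${0: -2} and \$@ are kept for the wrapper to evaluate at run time
cat << EOT > ${GCC_WRAPPER}
#!/bin/bash
exec ${DISTCC_BIN}/$(gcc -dumpmachine)-g\${0: -2} "\$@"
EOT
# the wrapper must be executable for the symlinks to work
chmod 755 ${GCC_WRAPPER}
for prog in c++ cc g++ gcc; do
ln -s ${GCC_WRAPPER} ${DISTCC_BIN}/${prog}
done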
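The wrapper relies on the last two characters of the name it is invoked as ($0): names ending in "cc" (cc, gcc) map to <prefix>-gcc and names ending in "++" (c++, g++) map to <prefix>-g++. The sketch below reproduces only that mapping so it can be checked in isolation; wrapper_target is a hypothetical name used for illustration.

```shell
#!/bin/bash
# Reproduces the wrapper's name mapping: "<prefix>-g" + the last two
# characters of the invocation name. Hypothetical helper, illustration only.
wrapper_target() {
    local prefix=$1 invoked=$2
    # Only the last two characters matter, so a full path such as
    # /usr/lib/distcc/bin/gcc gives the same result as plain "gcc".
    echo "${prefix}-g${invoked: -2}"
}

for name in cc gcc c++ g++ /usr/lib/distcc/bin/gcc; do
    printf '%-24s -> %s\n' "$name" \
        "$(wrapper_target armv6j-hardfloat-linux-gnueabi "$name")"
done
```

This is also why the wrapper never asks the SLAVE for a <prefix>-cc or <prefix>-c++ program: every call ends up as <prefix>-gcc or <prefix>-g++.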

Edit /etc/profile on your HOST system and replace the "export PATH" line with the one below:

export PATH="/usr/lib/distcc/bin:${PATH}"

This way the distcc programs are found on the PATH before the original gcc, which comes later. To activate the new PATH in the current shell, re-read the global environment by running:

root@rpi-gentoo ~ $ source /etc/profile
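To confirm that the change took effect, one can ask the shell which gcc it would run. A minimal sketch, assuming the Gentoo directory /usr/lib/distcc/bin used above; check_gcc_path is a hypothetical name.

```shell
#!/bin/bash
# Hypothetical check: report which gcc the shell will run and whether it is
# the distcc one. The directory defaults to the Gentoo location used above.
# Usage: check_gcc_path [DISTCC_BIN]
check_gcc_path() {
    local distcc_bin=${1:-/usr/lib/distcc/bin}
    local found
    found=$(command -v gcc) || { echo "gcc not found on PATH"; return 2; }
    if [ "$found" = "$distcc_bin/gcc" ]; then
        echo "OK: gcc resolves to $found"
    else
        echo "WARNING: gcc resolves to $found, not $distcc_bin/gcc"
        return 1
    fi
}
```

Run check_gcc_path on the HOST after sourcing /etc/profile; a WARNING means the PATH line was not picked up.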

3. Install the cross-compiler on the SLAVE

This step, too, depends on your Linux distribution. On Gentoo, one might choose to install sys-devel/crossdev or sys-devel/ct-ng. Since I did not succeed with crossdev, I will only present how I succeeded with the ct-ng tool. If you want to install ct-ng the old-fashioned way, follow the instructions at crosstool-ng.org. If your SLAVE is a Gentoo system, simply run:

root@x86_64-gentoo ~ $ emerge ct-ng

4. Build and configure the cross-compiler on the SLAVE

Since ct-ng is only a tool that automates building a cross-compiler toolchain, you don't have the cross-compiler needed to help the HOST with the (distributed) compilation tasks yet. When building a compiler for a target platform (such as ARM) you need to know exactly what your target platform is (here, the HOST). One simple way to find out is to look up the CC prefix on the HOST:

root@rpi-gentoo ~ $ gcc -dumpmachine

Let's suppose that the command above returned armv6j-hardfloat-linux-gnueabi. This means we should look for a ct-ng sample as close as possible to this prefix.

Now that we have an idea about the target platform, we can ask ct-ng to show its list of pre-configured samples (hopefully we'll find something similar to ours):

user@x86_64-gentoo ~ $ ct-ng list-samples

This would produce an output like:

user@x86_64-gentoo ~ $ ct-ng list-samples
Status  Sample name
[G.X]   alphaev56-unknown-linux-gnu
[G.X]   alphaev67-unknown-linux-gnu
[G.X]   arm-bare_newlib_cortex_m3_nommu-eabi
[G.X]   arm-cortex_a15-linux-gnueabi
[G..]   arm-cortex_a8-linux-gnueabi
[G..]   arm-davinci-linux-gnueabi
[G..]   arm-unknown-eabi
[G..]   arm-unknown-linux-gnueabi
[G.X]   arm-unknown-linux-uclibcgnueabi
[G..]   armeb-unknown-eabi
[G.X]   armeb-unknown-linux-gnueabi
[G.X]   armeb-unknown-linux-uclibcgnueabi
[G.X]   armv6-rpi-linux-gnueabi
[G.X]   avr32-unknown-none
[G..]   bfin-unknown-linux-uclibc
[G..]   i586-geode-linux-uclibc
[G.X]   i586-mingw32msvc,i686-none-linux-gnu
[G.X]   i686-nptl-linux-gnu
[G.X]   i686-unknown-mingw32
[G.X]   m68k-unknown-elf
[G.X]   m68k-unknown-uclinux-uclibc
[G.X]   mips-ar2315-linux-gnu
[G.X]   mips-malta-linux-gnu
[G..]   mips-unknown-elf
[G.X]   mips-unknown-linux-uclibc
[G.X]   mips64el-n32-linux-uclibc
[G.X]   mips64el-n64-linux-uclibc
[G..]   mipsel-sde-elf
[G..]   mipsel-unknown-linux-gnu
[G..]   powerpc-405-linux-gnu
[G..]   powerpc-860-linux-gnu
[G.X]   powerpc-e300c3-linux-gnu
[G.X]   powerpc-e500v2-linux-gnuspe
[G..]   powerpc-unknown-linux-gnu
[G..]   powerpc-unknown-linux-uclibc
[G..]   powerpc-unknown_nofpu-linux-gnu
[G.X]   powerpc64-unknown-linux-gnu
[G.X]   s390-ibm-linux-gnu
[G.X]   s390x-ibm-linux-gnu
[G..]   sh4-unknown-linux-gnu
[G..]   x86_64-unknown-linux-gnu
[G..]   x86_64-unknown-linux-uclibc
[G.X]   x86_64-unknown-mingw32
 L (Local)       : sample was found in current directory
 G (Global)      : sample was installed with crosstool-NG
 X (EXPERIMENTAL): sample may use EXPERIMENTAL features
 B (BROKEN)      : sample is currently broken

The armv6-rpi-linux-gnueabi sample above looks pretty good to me, since it and the HOST share the same CPU architecture (armv6) and both are compatible with the GNU EABI (cool!). If you want to know which components (kernel headers, libraries, compiler) that profile would install, run the following command:

user@x86_64-gentoo ~ $ ct-ng show-armv6-rpi-linux-gnueabi

[G.X] armv6-rpi-linux-gnueabi

OS : linux-3.6.11

Companion libs : gmp-5.0.2 mpfr-3.1.0 ppl-0.11.2 cloog-ppl-0.15.11 mpc-0.9

binutils : binutils-2.22

C compiler : gcc-linaro-4.7-2013.01 (C,C++)

C library : eglibc-2_16 (threads: nptl)

Tools
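Since the sample list is long, a small filter helps narrow it down; a plain `ct-ng list-samples | grep armv6` works as well. A sketch assuming the two-column layout shown above (status in brackets, then the sample name); pick_samples is a hypothetical name.

```shell
#!/bin/bash
# Hypothetical filter: reads "ct-ng list-samples" output on stdin and prints
# only the sample names (2nd column) that contain the given text.
pick_samples() {
    awk -v pat="$1" '$1 ~ /^\[/ && index($2, pat) { print $2 }'
}

# Usage on the SLAVE:
# ct-ng list-samples | pick_samples armv6
```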

Once you've decided, configure ct-ng to use that sample/profile. By default the toolchain will be installed in ${HOME}/x-tools/armv6-rpi-linux-gnueabi. Note that you should run these commands as a regular user (not root!):

user@x86_64-gentoo ~ $ ct-ng armv6-rpi-linux-gnueabi

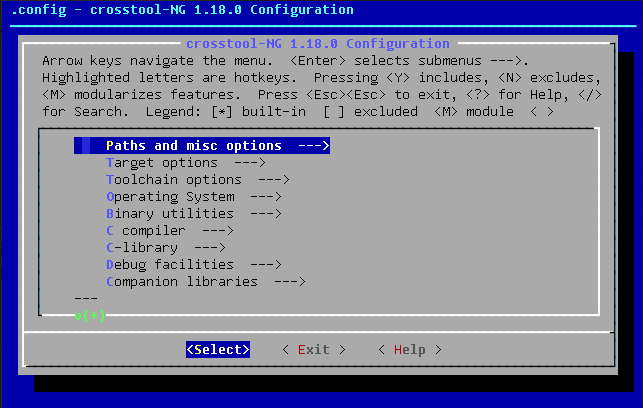

If you want to fine-tune this configuration via an ncurses menu (like the one you get when configuring the Linux kernel), also run this command:

user@x86_64-gentoo ~ $ ct-ng menuconfig

The menu will look like the one below (that's one reason I prefer this tool over the others, plus it works!):

When ready, start building the cross-compiler toolchain as follows (note that it takes a while, tens of minutes):

user@x86_64-gentoo ~ $ ct-ng build

If you want to use this compiler directly on the SLAVE, outside the distcc scope, make sure your shell's PATH variable includes the path of this new toolchain. Edit either your ~/.profile or the global /etc/profile file, add the following lines, then run "source ~/.profile" or "source /etc/profile" to update your local/global PATH:

CROSS_ROOT="${HOME}/x-tools/armv6-rpi-linux-gnueabi" # in /etc/profile, use an absolute path instead of the $HOME variable

CROSS_PATH="${CROSS_ROOT}/bin:${CROSS_ROOT}/libexec/gcc/armv6-rpi-linux-gnueabi/4.7.3:${CROSS_ROOT}/armv6-rpi-linux-gnueabi/bin"

export PATH="${CROSS_PATH}:${PATH}"

To let distcc use this toolchain, you must also set the PATH variable for the distcc daemon in the /etc/conf.d/distccd file (see step 6 below).

Note: replace the 4.7.3 version above with the real one that fits your build.
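Instead of hard-coding the version, it can be read from the directory ct-ng created under libexec/gcc/<target>/. A minimal sketch, assuming that layout (the same one the CROSS_PATH line above relies on); make_cross_path is a hypothetical name, and if several versions are present it picks the highest one using GNU sort -V.

```shell
#!/bin/bash
# Hypothetical helper: builds the CROSS_PATH value used above, taking the
# GCC version from libexec/gcc/<target>/<version>/ instead of hard-coding it.
# Usage: make_cross_path CROSS_ROOT TARGET
make_cross_path() {
    local root=$1 target=$2 version
    # Highest version directory wins if more than one is installed.
    version=$(ls -1 "$root/libexec/gcc/$target" 2>/dev/null | sort -V | tail -n 1)
    if [ -z "$version" ]; then
        echo "no GCC version found under $root/libexec/gcc/$target" >&2
        return 1
    fi
    echo "$root/bin:$root/libexec/gcc/$target/$version:$root/$target/bin"
}

# Example:
# CROSS_PATH=$(make_cross_path "$HOME/x-tools/armv6-rpi-linux-gnueabi" armv6-rpi-linux-gnueabi)
```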

Because the SLAVE cross-compiler CC prefix differs from the one on the HOST (armv6-rpi-linux-gnueabi vs. armv6j-hardfloat-linux-gnueabi), when the HOST asks the SLAVE to compile a source file with armv6j-hardfloat-linux-gnueabi-*, the SLAVE fails and returns the error shown at the beginning (exit code 110).

The solution for this would be to create the following symlinks on the SLAVE:

HOST_PREFIX=armv6j-hardfloat-linux-gnueabi

SLAVE_PREFIX=armv6-rpi-linux-gnueabi

CROSS_BIN=${HOME}/x-tools/armv6-rpi-linux-gnueabi/bin

for prog in c++ cc g++ gcc;do

ln -s $CROSS_BIN/$SLAVE_PREFIX-$prog $CROSS_BIN/$HOST_PREFIX-$prog

done
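Note that ln -s happily creates a dangling link when its target does not exist (for instance if your ct-ng build did not install a <prefix>-cc), so a quick check can help. A minimal sketch; verify_host_links is a hypothetical name, and it checks the same four names as the loop above.

```shell
#!/bin/bash
# Hypothetical check: every HOST-prefixed name must resolve to an executable.
# Usage: verify_host_links CROSS_BIN HOST_PREFIX
verify_host_links() {
    local bin=$1 host_prefix=$2 prog rc=0
    for prog in c++ cc g++ gcc; do
        if [ -x "$bin/$host_prefix-$prog" ]; then   # -x follows the symlink
            echo "ok      $host_prefix-$prog -> $(readlink -f "$bin/$host_prefix-$prog")"
        else
            echo "MISSING $host_prefix-$prog (absent or dangling link)"
            rc=1
        fi
    done
    return "$rc"
}

# Example, as the user that owns ~/x-tools:
# verify_host_links "$HOME/x-tools/armv6-rpi-linux-gnueabi/bin" armv6j-hardfloat-linux-gnueabi
```

Since the HOST wrapper from step 2 only ever requests <prefix>-gcc and <prefix>-g++, a MISSING line for -cc or -c++ is not necessarily a problem for that setup.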

Now, every time the HOST asks the SLAVE to compile a source file using the armv6j-hardfloat-linux-gnueabi tools, the SLAVE will obey and cheat at the same time, because it redirects that command to the locally installed cross-compiler (e.g. armv6-rpi-linux-gnueabi). To test your newly installed ARM cross-compiler, grab a "Hello world" C program and compile it locally as below (hopefully output.o gets created and everybody's happy):

armv6-rpi-linux-gnueabi-gcc -c -o output.o source.c

You should also read the installation/usage instructions on the crosstool-ng.org website.

5. Install distcc on the SLAVE

Repeat the same procedure as in step 1 above.

6. Configure distcc on the SLAVE

Edit the file /etc/conf.d/distccd and make sure it contains the following settings (note that these environment variables are well documented in your default configuration file):

DISTCC_VERBOSE="0"

DISTCCD_OPTS="--user nobody"

DISTCCD_OPTS="${DISTCCD_OPTS} -j X"

DISTCC_LOG="/var/log/distcc.log"

DISTCCD_EXEC="/usr/bin/distccd"

DISTCCD_PIDFILE="/var/run/distccd/distccd.pid"

DISTCCD_OPTS="${DISTCCD_OPTS} --port 3632"

DISTCCD_OPTS="${DISTCCD_OPTS} --log-level critical"

DISTCCD_OPTS="${DISTCCD_OPTS} --allow <HOST-ip/netmask>"

DISTCCD_OPTS="${DISTCCD_OPTS} -N 15"

CROSS_ROOT="/home/<your-user>/x-tools/armv6-rpi-linux-gnueabi"

CROSS_PATH="${CROSS_ROOT}/bin:${CROSS_ROOT}/libexec/gcc/armv6-rpi-linux-gnueabi/4.7.3:${CROSS_ROOT}/armv6-rpi-linux-gnueabi/bin"

PATH=$CROSS_PATH:${PATH}

Note: adjust the 4.7.3 version to the one that fits your build. Also replace X with the number of jobs your SLAVE should accept. To keep the SLAVE busy while the R-Pi HOST struggles with its I/O bottleneck, I set this to at least 2-5 times the number of cores of the SLAVE system. Make sure the HOST will also attempt to use all those available job slots (if you run make manually, use the -j switch too).
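The rule of thumb above (2-5 times the SLAVE's core count) is simple arithmetic; a minimal sketch that computes it on the SLAVE, assuming GNU coreutils' nproc is available. slave_jobs is a hypothetical name and the factor is yours to choose.

```shell
#!/bin/bash
# Hypothetical helper: suggests X for distccd's "-j X" as the number of
# available cores times a factor (the article suggests 2 to 5; default 2).
slave_jobs() {
    local factor=${1:-2}
    echo $(( $(nproc) * factor ))
}

# slave_jobs 3   # on a dual-core SLAVE such as the Core 2 Duo below, prints 6
```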

Make sure that you restart the distccd service after these changes.
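On Gentoo with OpenRC the restart is /etc/init.d/distccd restart. Afterwards it can be useful to check from the HOST that the SLAVE answers on the distccd port (3632, as configured above). A minimal sketch using bash's /dev/tcp redirection and coreutils' timeout; distccd_reachable is a hypothetical name. A successful connection only shows that the port is open: distccd may still refuse jobs from addresses outside its --allow list.

```shell
#!/bin/bash
# Hypothetical check, run from the HOST: can a TCP connection be opened to
# the SLAVE's distccd port within 3 seconds?
# Usage: distccd_reachable HOST [PORT]
distccd_reachable() {
    local host=$1 port=${2:-3632}
    # Inside bash -c, $0 is the host and $1 the port.
    timeout 3 bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$host" "$port" 2>/dev/null
}

# Example (SLAVE-IP is a placeholder):
# distccd_reachable SLAVE-IP && echo "port open" || echo "no answer on port 3632"
```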

7. Configure the HOST's Gentoo Portage to use distcc

Portage can use distcc out of the box. To enable it, edit /etc/make.conf (nowadays /etc/portage/make.conf) and set the following options:

FEATURES="${FEATURES} distcc"

MAKEOPTS="-jX"

DISTCC_HOSTS="SLAVE1 SLAVE2 ... localhost"

Note: X above is the total number of CPUs of localhost + SLAVE1 + SLAVE2 + ..., and SLAVE* is the IP address or host name of each SLAVE system to which the HOST distributes compilation jobs.

8. Compile the kernel on the R-Pi via distcc

Although one might cross-compile the kernel on a powerful system and later deploy the kernel image to the R-Pi SD card, it's also possible to compile the kernel on the R-Pi itself.

Using distcc as described above will not work out of the box, because the Linux kernel Makefile insists on calling plain gcc/g++; the local (HOST) distcc will therefore ask the SLAVE to compile kernel files with the SLAVE's own gcc/g++ instead of the armv6-* cross-compiler.

Because the SLAVE runs a gcc for its own architecture (e.g. x86_64-pc-linux-gnu-*), it will compile every source received from the R-Pi's distcc with that native compiler, producing object files for the SLAVE architecture (such as x86_64) instead of binaries for the ARM architecture that an armv6j CPU understands.
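To notice when this happens, one can check which architecture an object file was built for. `file some.o` is the everyday way; the sketch below does the same check in plain shell by reading the 16-bit e_machine field at byte offset 18 of the ELF header (3 is i386, 40 is ARM, 62 is x86-64), assuming a little-endian object, which holds for both systems here. elf_machine is a hypothetical name.

```shell
#!/bin/bash
# Hypothetical helper: prints the architecture recorded in an ELF object.
# Reads e_machine (2 bytes at offset 18) as a little-endian number.
elf_machine() {
    local f=$1 magic lo hi
    magic=$(head -c 4 -- "$f" | od -An -t x1 | tr -d ' \n')
    if [ "$magic" != "7f454c46" ]; then        # "\x7fELF"
        echo "not an ELF file: $f" >&2
        return 1
    fi
    read -r lo hi < <(od -An -t u1 -j 18 -N 2 -- "$f")
    case $(( lo + 256 * hi )) in
        3)  echo "i386" ;;
        40) echo "ARM" ;;
        62) echo "x86-64" ;;
        *)  echo "other (e_machine=$(( lo + 256 * hi )))" ;;
    esac
}

# On the R-Pi, after a distcc build (the path is only an example):
# elf_machine init/main.o     # should print ARM
```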

Although there might be many other solutions, the one I came up with, which does not alter the configuration from steps 1-7, is to temporarily disable gcc on the SLAVE. On my SLAVE (x86_64) I simply renamed the /usr/x86_64-pc-linux-gnu folder (to /usr/x86_64-pc-linux-gnu.old) and, once the kernel compilation ended, renamed it back. It's a dirty solution, I know, but until I find a better one I have to live with it.
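If you follow this route, a small wrapper can make sure the directory gets its name back even if the wait is interrupted with Ctrl-C. A minimal sketch, to be run as root on the SLAVE; with_dir_hidden is a hypothetical name and, like the rename itself, this is a workaround rather than a fix.

```shell
#!/bin/bash
# Hypothetical helper: temporarily renames DIR to DIR.old, runs a command,
# and renames it back when the command ends or is interrupted.
# The function body is a subshell, so its traps do not leak into the caller.
# Usage: with_dir_hidden DIR COMMAND [ARGS...]
with_dir_hidden() (
    dir=$1
    shift
    mv -- "$dir" "$dir.old" || exit 1
    trap 'mv -- "$dir.old" "$dir"' EXIT
    trap 'exit 130' INT TERM    # turn Ctrl-C/kill into an exit so EXIT runs
    "$@"
)

# On the SLAVE, as root, while the R-Pi builds its kernel:
# with_dir_hidden /usr/x86_64-pc-linux-gnu \
#     read -rp "Press Enter when the R-Pi kernel build has finished... "
```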

Note: when compiling the kernel on the R-Pi, don't forget to use the -jX switch, where X is the number of cores of your SLAVE + 1.

Is it worth all this effort?


To answer that question I compiled the Linux kernel directly on the R-Pi (@700 MHz, no overclocking), with and without distcc, with the help of an HP workstation with a Core 2 Duo E8400 CPU @ 3.00 GHz and 4 GB RAM (see [!] below):

- make -j10 with distcc (-j10) on (HP) SLAVE: 4080 seconds

- make without distcc (only 1 job on R-Pi): 10524 seconds

- The HOST /etc/distcc/hosts defines the following: SLAVE-IP/10,lzo --localslots=1

The bottom line: with only one SLAVE, the distcc build finished about 2.6 times faster. Adding more SLAVEs to this setup should make the process faster still, and the result can be improved further by tuning the /LIMIT part of the distcc host specifications (see the distcc manual).
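The /LIMIT values also determine how many jobs the HOST can usefully hand out, which is why make -j10 matches SLAVE-IP/10 above. A minimal sketch that adds them up for a host list written like the one above; it skips options such as --localslots=1 and, for hosts without an explicit limit, assumes distcc's documented defaults of 4 per remote host and 2 for localhost (check the man page of your distcc version). sum_slots is a hypothetical name.

```shell
#!/bin/bash
# Hypothetical helper: adds up the job slots in a distcc host list.
# Assumed defaults when no /LIMIT is given: 4 per remote host, 2 for localhost.
sum_slots() {
    local total=0 spec host limit
    for spec in $1; do                  # intentional word splitting
        case $spec in
            --*) continue ;;            # options such as --localslots=1
        esac
        host=${spec%%,*}                # drop ",lzo", ",cpp", ...
        if [[ $host == */* ]]; then
            limit=${host##*/}
        elif [[ $host == localhost ]]; then
            limit=2
        else
            limit=4
        fi
        total=$(( total + limit ))
    done
    echo "$total"
}

sum_slots "SLAVE-IP/10,lzo --localslots=1"    # prints 10, matching make -j10
```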


[!] The above compilation was done with this kernel .config file. If you choose to use it then remember to remove the CONFIG_CROSS_COMPILE parameter as it was not meant to be used in this project but rather in this one.

In another test I used another kernel .config, cut down even further than the original one (I removed sound, wireless, USB support for anything other than keyboard, mouse and storage, unused cryptography algorithms, unused NLS pages, etc.), and ran a similar test:

- make -j4 with distcc (-j10) on (HP) SLAVE: 3028 seconds

- make without distcc (only 1 job on R-Pi): 8035 seconds

- The HOST /etc/distcc/hosts defines the following: SLAVE-IP/4 --localslots=1

So basically, if you use your R-Pi only for experiments (not as a gaming/media console) and use this simplified kernel configuration, it's possible to compile the R-Pi kernel on the R-Pi itself in about 2 h 15 min (8035 s) without any extra help, or in about 50 minutes (3028 s) with the help of distcc distributed compilation.
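The arithmetic behind these numbers can be reproduced with a few lines of shell: the speedup is the time without distcc divided by the time with distcc. A small sketch; hms and speedup are hypothetical helper names, and the inputs are the measurements reported above.

```shell
#!/bin/bash
# Reproduces the arithmetic behind the timings above.
hms() {        # seconds -> "Hh MMm SSs"
    local s=$1
    printf '%dh %02dm %02ds\n' $(( s / 3600 )) $(( s % 3600 / 60 )) $(( s % 60 ))
}
speedup() {    # time without distcc / time with distcc, 2 decimals
    awk -v slow="$1" -v fast="$2" 'BEGIN { printf "%.2f\n", slow / fast }'
}

echo "full .config:    $(hms 10524) -> $(hms 4080), speedup $(speedup 10524 4080)"
echo "reduced .config: $(hms 8035) -> $(hms 3028), speedup $(speedup 8035 3028)"
```

It prints 2h 55m 24s -> 1h 08m 00s (speedup 2.58) for the first test and 2h 13m 55s -> 0h 50m 28s (speedup 2.65) for the second.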

Final note

The process described above does not apply only to ARM vs. X86-* architectures. A setup like this should also work when you have an old/slow i386 PC and a powerful X86-* system and want to distribute the compilation across the network so that the i386 gets a hand from its network fellows. Working with heterogeneous environments is a science, but not an exact science. You have to grab the bull by the horns; sometimes you fix a problem only after many trials and errors. A hard job, but somebody has to do it. This blog is also worth reading: http://rostedt.homelinux.com/distcc/.

Now, if you found this article interesting, don't forget to rate it. It shows me that you care, and I will continue to write about these things.

Eugen Mihailescu

